AI Intelligence Robotic Pet


Main Overview

Built on a large AI model, the pet delivers nuanced emotional interactions that users can readily perceive.

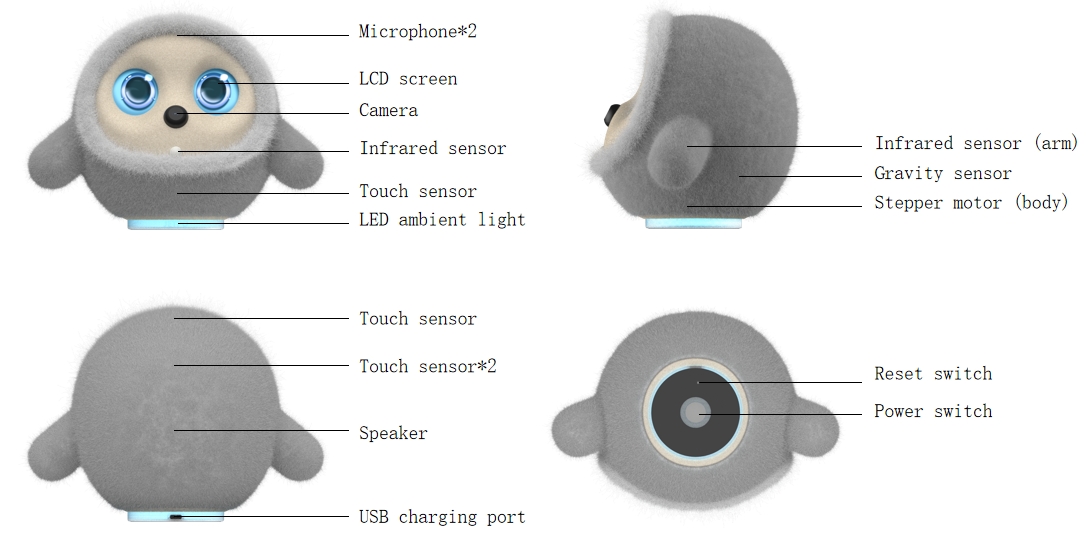

Situational Awareness:

Using touch sensors, microphones, cameras, and infrared detection sensors covering the head and back, it perceives and interacts with its surroundings.

Situational Understanding:

Voice input and visual input (focusing on static frames) are fused, and a "text-image multimodal large model" generates the conversation output.

Infrared detection sensors and microphones sense biological activity so that the pet can proactively interact with users.

Emotional Expression:

Emotions are expressed multimodally through the body and hand stepper motors, the LCD (eyes), and the speaker.

Placement and Carrying Method:

Displayed on a desktop or bedside; can also be hugged

Usage Scenarios:

Relatively fixed location, low mobility requirements, usually left connected to the charger

· Able to understand natural language, actions, and the dynamic physical world

· Able to express through natural language, mechanical movement, and electronic animation

· No ability to express emotions

· No cute appearance and feel

User Journeys and Their Physical Basis

Personality: voice adjustment, personality adjustment

Practical functions: news search, knowledge popularization, life assistance

Active perception: sound sensor, drop sensor

Entertainment commands: playing games, telling stories, ......

Standby actions: 😪 drowsiness, 🤨 looking around, ......

Pet interaction: snoring, head patting, ......

Special emotional expressions: blinking eyes, being called or patted awake, ......

Reliance: sleeping together, playing together, keeping each other company

Emotional expression: 😪 shy, 😢 wronged, ......

Special standby actions: charging, full battery

Natural language dialogue: memory, commands to trigger conversations

Active perception: user leaving or returning home

| ASR + LLM | Visual image understanding | Touch events | Infrared timer | Dialogue memory | Character settings |

| Networked cloud-based large-scale model platform (multimodal perception, large language model, conversational memory, network query) |

| Multimodal perception and control system layer |

| Scene understanding / trigger control | Lighting control | Motor control | Display control |

| Hardware embedding layer (robot body) |
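
The control layer in the stack above pairs scene understanding / trigger control with lighting, motor, and display control. As a rough illustration of that routing only, the following sketch dispatches a perceived event to stand-in controllers. Every name here (`SceneEvent`, `TriggerControl`, the event labels `touch_head` and `infrared_timer`, and the handler functions) is hypothetical and not taken from the product's firmware.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SceneEvent:
    """An event produced by scene understanding (hypothetical structure)."""
    name: str  # e.g. "touch_head" or "infrared_timer"; labels are assumptions


Handler = Callable[[SceneEvent], str]


@dataclass
class TriggerControl:
    """Routes each scene event to the controllers registered for it."""
    routes: Dict[str, List[Handler]] = field(default_factory=dict)

    def register(self, event_name: str, handler: Handler) -> None:
        self.routes.setdefault(event_name, []).append(handler)

    def dispatch(self, event: SceneEvent) -> List[str]:
        # Unknown events produce no actions rather than raising.
        return [handler(event) for handler in self.routes.get(event.name, [])]


# Stand-ins for the lighting, motor, and display controllers. Real drivers
# would talk to hardware; these only describe the action they would take.
def lighting_control(event: SceneEvent) -> str:
    return f"lighting: play effect for {event.name}"


def motor_control(event: SceneEvent) -> str:
    return f"motor: move in response to {event.name}"


def display_control(event: SceneEvent) -> str:
    return f"display: show expression for {event.name}"


def build_trigger_control() -> TriggerControl:
    """Wire up an example routing table (the routes are assumptions)."""
    control = TriggerControl()
    for handler in (lighting_control, motor_control, display_control):
        control.register("touch_head", handler)
    control.register("infrared_timer", display_control)
    return control


if __name__ == "__main__":
    control = build_trigger_control()
    for action in control.dispatch(SceneEvent("touch_head")):
        print(action)
```

Keeping the routing table separate from the controllers means a new trigger can be added without touching the lighting, motor, or display code.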

Environmental perception module

| Device | Specifications/Features |
| --- | --- |
| Microphone | Supports far-field voice recognition (5-meter range) and directional sound pickup for receiving voice commands. |
| Camera | Used for environment and object recognition. |
| Infrared sensor | Used for low-power wake-up triggered by a nearby person or pet. |
| Touch sensor | Distributed touch modules (head, back, abdomen) detect stroking and patting (such as "touching the head" and "tickling"). |
| Gravity sensor | Senses the body's motion state and triggers a "distress signal" (such as the voice message "I fell and it hurts") when the product falls. |
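
The gravity sensor row describes a fall triggering a spoken distress message. One common way to detect a fall from accelerometer data is to look for a short run of near-zero acceleration (free fall) followed immediately by a spike (impact). The sketch below illustrates that idea under assumed values: the thresholds, the sample format (x, y, z in units of g), and the function names are all hypothetical, and the product's actual detection logic is not documented here.

```python
import math
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]  # acceleration (x, y, z) in units of g

# Assumed thresholds for illustration only; real values need tuning on hardware.
FREE_FALL_G = 0.3           # below this magnitude the body is treated as falling
IMPACT_G = 2.0              # above this magnitude a sample counts as an impact
MIN_FREE_FALL_SAMPLES = 3   # consecutive free-fall samples required


def magnitude(sample: Sample) -> float:
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples: Iterable[Sample]) -> bool:
    """Return True if a free-fall run is immediately followed by an impact."""
    free_fall_run = 0
    for sample in samples:
        g = magnitude(sample)
        if g < FREE_FALL_G:
            free_fall_run += 1
            continue
        # First non-free-fall sample after the run: check for an impact spike.
        if free_fall_run >= MIN_FREE_FALL_SAMPLES and g > IMPACT_G:
            return True
        free_fall_run = 0
    return False


def distress_message(samples: Iterable[Sample]) -> Optional[str]:
    """Map a detected fall to the example voice message from the spec table."""
    return "I fell and it hurts" if detect_fall(samples) else None


if __name__ == "__main__":
    falling = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.1), (0.0, 0.0, 0.1),
               (0.0, 0.0, 0.05), (0.5, 0.5, 2.5)]
    print(distress_message(falling))
```

Requiring several consecutive free-fall samples is a simple guard against a single noisy reading triggering the message.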

Human-computer interaction module

| Device | Specifications/Features |
| --- | --- |
| LCD expression screen | 4.28-inch LCD screen (eyes); supports dynamic expression display and binocular display (such as visual feedback when "playing dead" or "acting cute"). |
| Speaker | Mono, 4 Ω, 5 W full-range speaker (for voice output such as "singing a song" or "telling a joke"). |
| Full-color LED light strips | Colored light strips that play lighting effects matching the current "emotional state" or serve as indicator lights. |

Motion control module

| Device | Specifications/Features |
| --- | --- |
| Stepper motor | Dual motors for waving and turning the head (waist) |

Data processing and communication module

| Device | Specifications/Features |
| --- | --- |
| Main control chip | V821: basic function control, voice processing, binocular asynchronous display |
| Wi-Fi / Bluetooth | 2.4 GHz Wi-Fi + Bluetooth |
| Storage unit | 256 MB NAND flash, 64 MB DRAM |

Battery life and accessory modules

| Device | Specifications/Features |
| --- | --- |
| Lithium battery | 3000 mAh capacity at 7.2 V; supports fast charging; 2 hours of battery life and 2 days of comprehensive standby; equipped with a power detection chip. |
| Charging port | USB Type-C |
| Accessory modules | Interchangeable clothing and accessories in different styles |
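
The battery figures in the table imply a rough power budget. As a back-of-the-envelope check only, the sketch below treats 7.2 V as the nominal voltage and assumes the full 3000 mAh is usable, which real batteries do not quite deliver. Under those assumptions the pack stores about 21.6 Wh, which works out to roughly 10.8 W average draw over 2 hours of use and roughly 0.45 W over 2 days of standby. The function names are illustrative.

```python
# Figures taken from the spec table above.
CAPACITY_MAH = 3000.0
NOMINAL_VOLTAGE_V = 7.2
ACTIVE_HOURS = 2.0
STANDBY_HOURS = 2 * 24.0


def energy_wh(capacity_mah: float, voltage_v: float) -> float:
    """Stored energy in watt-hours, assuming the full capacity is usable."""
    return capacity_mah / 1000.0 * voltage_v


def average_power_w(energy: float, hours: float) -> float:
    """Average power draw that would drain `energy` in `hours`."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return energy / hours


if __name__ == "__main__":
    pack_wh = energy_wh(CAPACITY_MAH, NOMINAL_VOLTAGE_V)
    print(f"Stored energy: {pack_wh:.1f} Wh")
    print(f"Implied active draw: {average_power_w(pack_wh, ACTIVE_HOURS):.2f} W")
    print(f"Implied standby draw: {average_power_w(pack_wh, STANDBY_HOURS):.2f} W")
```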

Emotional expression:

Set the emotion expression:


20 emotional eye settings

| angry | rolls its eyes | faint | act cool |

| enthusiasm | sad | awkward | shy |

| laughing out loud | smile | heart eyes | standby |

| sleep | cute | wronged | Sun Wukong |

| daze | curious | cross-eye | evil |
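
For anyone scripting against these presets, the 20 eye settings above map naturally onto an enumeration. The sketch below is illustrative only: the `EyeExpression` type, the `parse_expression` helper, and the choice to fall back to `standby` for unrecognized labels are assumptions, not part of any documented interface for the product. The labels are copied from the table as written.

```python
from enum import Enum


class EyeExpression(Enum):
    """The 20 eye settings listed in the table (labels copied verbatim)."""
    ANGRY = "angry"
    ROLLS_ITS_EYES = "rolls its eyes"
    FAINT = "faint"
    ACT_COOL = "act cool"
    ENTHUSIASM = "enthusiasm"
    SAD = "sad"
    AWKWARD = "awkward"
    SHY = "shy"
    LAUGHING_OUT_LOUD = "laughing out loud"
    SMILE = "smile"
    HEART_EYES = "heart eyes"
    STANDBY = "standby"
    SLEEP = "sleep"
    CUTE = "cute"
    WRONGED = "wronged"
    SUN_WUKONG = "Sun Wukong"
    DAZE = "daze"
    CURIOUS = "curious"
    CROSS_EYE = "cross-eye"
    EVIL = "evil"


def parse_expression(label: str) -> EyeExpression:
    """Look up an expression by label, ignoring case and outer whitespace.

    Falling back to STANDBY for unknown labels is an assumption made for
    this sketch, not documented product behavior.
    """
    normalized = label.strip().lower()
    for expression in EyeExpression:
        if expression.value.lower() == normalized:
            return expression
    return EyeExpression.STANDBY


if __name__ == "__main__":
    print(len(EyeExpression), "expressions defined")
    print(parse_expression("Heart Eyes"))
```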

Set the emotion sensing method:

Application scenarios: